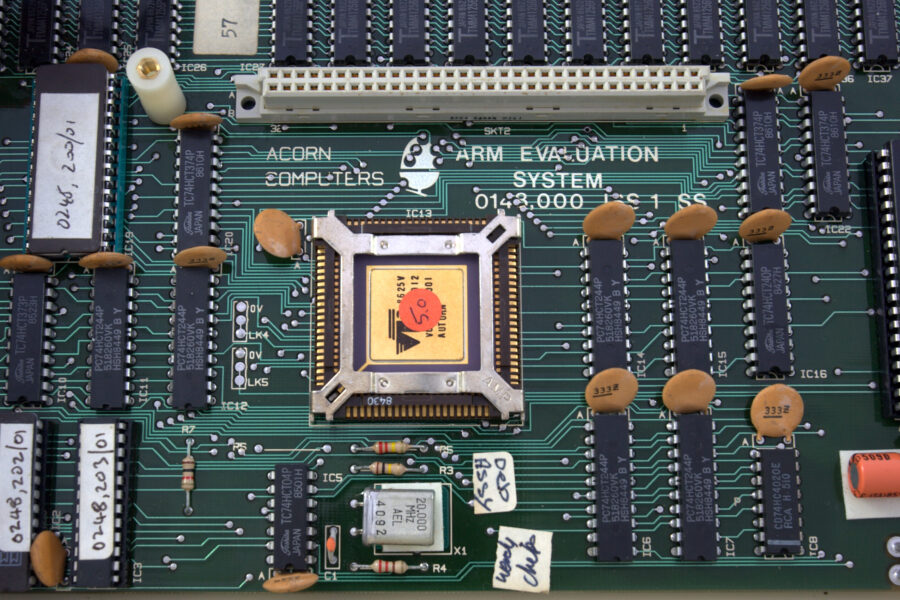

Main image: ARM1 2nd processor for the BBC Micro. Photograph by Peter Howkins

April 2025 marks the 40th anniversary of the development of the first Arm microchip. Born out of the need to power the latest microcomputer models from Acorn Computers, the company's predecessor, in the mid-1980s, the ARM1 chip was the beginning of one of the UK's most successful tech stories.

Since then, an estimated 250 billion Arm-based chips have been produced, used worldwide in devices ranging from computers, laptops, smartphones and sensors to vehicles and huge datacentres.

The story began in 1978, when Chris Curry and Hermann Hauser founded Acorn Computers. Among those attending one of its first meetings was the British computer scientist and engineer Steve Furber CBE, then working on his PhD at Cambridge University, who would go on to co-design the original chip.

The chip grew out of Acorn's success building microcomputers, most notably the BBC Micro, which used an 8-bit microprocessor. Furber, interviewed by Archives of IT in 2018, said the obvious next step was to make the computers more powerful by moving to 16-bit and then 32-bit microprocessors.

“It was driven by what was becoming known as Moore’s Law. The number of transistors you could put on a chip was doubling every 18 months to two years, so the amount of functionality you could put on there was growing at that rate and going from eight to 16 bits was a natural progression.”

However, when the team looked at all the 16-bit processors that could be bought off the shelf at the time, they didn't like any of them.

“Their real-time performance was very poor and they could not make full use of the available memory bandwidth,” he said. “We had done several tests on a few microprocessors that drove us to the conclusion that the thing that determined the performance of a computer more than anything else was the computer’s ability to access memory bandwidth.”

Reduced instruction set computer (RISC)

So, in 1983, after Hauser shared published papers on the concept from Berkeley and Stanford universities, Acorn decided to design its own microprocessor based on the reduced instruction set computer (RISC) approach.

Hauser tasked Sophie Wilson, who had designed the Acorn System 1, with the instruction set design, while Furber created the microarchitecture. As Furber recalled: "There were other factors, such as we had employed some experienced chip designers but didn't really have anything for them to do. So, when I sketched a microarchitecture, they were there waiting, and they took the microarchitecture specs and did the silicon implementation."

Acorn RISC Machine (ARM)

Within 18 months, on 26 April 1985, they had the first working ARM chip, and the architecture would go on to power Acorn's new Archimedes computers. The chip used just 25,000 transistors on a three-micron process. They called it the Acorn RISC Machine (ARM), a name later changed to Advanced RISC Machine. Furber and the engineers at Acorn found that the ARM had a remarkable property: it used hardly any electricity to run, far less than the complex instruction set computer (CISC) microprocessors on the market. In the years to come, this low power consumption would make it ideal for mobile devices, especially smartphones.

“The small team’s vision was to make high-performance, power-efficient computing accessible to all,” said Arm in a statement on its 40th anniversary. “Born out of simplicity, elegance and parsimony, the architecture laid the groundwork for a new era of efficient, scalable technology. What began as an ambitious project in a small corner of Cambridge, UK, has grown into the world’s most widely adopted computing architecture, now powering billions of devices.”

Arm has remained at the forefront of chip development, and in 2024 it was reported to be working on designing artificial intelligence (AI) chips.