Main image: The Cray XC40 supercomputer. The Met Office currently has three XC40s, installed in 2016, two that carry out operational modelling and a third that is dedicated to weather and climate research. All photographs courtesy of the Met Office. Words: Adrian Murphy

One of the biggest challenges of the 21st century is climate change, and the Met Office is at the forefront of critical weather services, world-leading climate science and the use of supercomputers.

That is why our industry theme for June 2023 is Supercomputers and the Met Office, where we have been rediscovering four interviews AIT conducted with the UK’s national weather service between August and September 2022.

Through these personal accounts from Archivist Catherine Ross, Hybrid Cloud Solutions Architect Martyn Catlow, Technology Director and Chief Information Officer Charles Ewen, and IT Fellow Chris Little, we get a story that encapsulates not only the Met Office and its history but also the history of computing.

Electronic Communication and numerical modelling of the atmosphere

Catherine Ross, Met Office Archivist, is responsible for the maintenance and safekeeping of all the collections, including an analogue archive and a digital library and archive. In her interview she explains how the creation of worldwide meteorological offices grew from an idea of a US Navy Lieutenant, Matthew Fontaine Maury, that led to an international conference in Brussels in 1853.

The following year the Meteorological Department was established by the Board of Trade.

It was the electric telegraph that allowed the first storm warning service (now known as the iconic shipping forecast) to be set up, because data could flow freely from one location to another to support weather forecasting. The first warning was sent in February 1861 and was followed by the public weather forecast that August.

Catherine has uncovered fascinating information that expands our knowledge of the history of computing, such as the fact that data analysis for the Met Office was carried out by people who performed calculations by hand and were known as computers from the 1860s onwards, long before the days of programmable digital computers.

In 1922 physicist Lewis Fry Richardson (who from 1913 had been in charge of the Met Office’s Eskdalemuir observatory in Scotland) published his book, Weather Prediction by Numerical Process, which set out the basic equations for Numerical Weather Prediction several decades before the invention of the digital computers that would make it practical.

“In his book, he is describing how a computer could work, decades before the advent of a computer … he’s describing the master-slave relationship of a supercomputer with multiple nodes. He’s got the idea in his head long before anybody had a computer that could even get close to what he was trying to do. His basic equations are still there at the bottom of the models today, because they’re the basic equations of thermodynamics.”

It would be 1948 before a computer meeting was set up between the Met Office and Imperial College to discuss ‘the possibilities of using electronic computing machines in meteorology’.


Microsoft and the cloud

The Met Office has consistently demanded more from computers, from being one of the first users of the Leo I in 1951 to its use of today’s most powerful supercomputers such as the Cray XC40.

The Met Office currently has three Cray XC40 supercomputers, installed in 2016, two that carry out operational modelling (also acting as back up for each other) and a third that is dedicated to weather and climate research.

But it hasn’t stopped there: in April 2021 the Met Office announced it would build the world’s most powerful weather and climate forecasting supercomputer in the UK in partnership with Microsoft.

It plans to use Microsoft’s cloud computing services – a huge departure from historically managing its own services – and integrate Hewlett Packard Enterprise Cray supercomputers. The aim is for the supercomputer to run on 100% renewable energy; it will have more than 1.5 million processor cores and deliver more than 60 petaflops, or 60 quadrillion (60,000,000,000,000,000) calculations per second.
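As a rough back-of-envelope check on those figures, the short Python sketch below converts 60 petaflops into calculations per second and divides the result by 1.5 million cores. The even split across cores is an assumption made purely for illustration, and the variable names are our own rather than anything from the Met Office.

```python
# Back-of-envelope arithmetic for the figures quoted above.
PETA = 10**15  # one quadrillion

peak_petaflops = 60          # "more than 60 petaflops"
processor_cores = 1_500_000  # "more than 1.5 million processor cores"

calcs_per_second = peak_petaflops * PETA

# Assumption: the work is spread evenly over every core,
# which real machines never achieve exactly.
calcs_per_core = calcs_per_second // processor_cores

print(f"{calcs_per_second:,} calculations per second in total")
print(f"{calcs_per_core:,} calculations per second per core")
```

On these assumptions each core would need to sustain around 40 billion calculations per second.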

A statement at the time said: “This new supercomputer – expected to be the world’s most advanced dedicated to weather and climate – will be in the top 25 supercomputers in the world and be twice as powerful as any other in the UK.

“The data it generates will be used to provide more accurate warnings of severe weather, helping to build resilience and protect the UK population, businesses and infrastructure from the impacts of increasingly extreme storms, floods and snow.

“It will also be used to take forward ground-breaking climate change modelling, unleashing the full potential of the Met Office’s global expertise in climate science.”

It continues a trend in which the Met Office’s numerical modelling of the atmosphere has become increasingly sophisticated, and with it the demand for more power, which is why it has embraced supercomputers such as those designed by Control Data, IBM and Cray.

Early supercomputers in the 1960s

According to IBM the term supercomputer was coined in the early 1960s to refer to computers that were more powerful than the fastest computers at the time. The first supercomputers by this definition were the IBM 7030 Stretch and the UNIVAC LARC developed for the US government.

They were followed in the mid-60s by the commercial supercomputers of US firm Control Data Corporation, where Seymour Cray, who later founded Cray Research, designed the CDC 6600 in 1964, acknowledged as the first commercially successful supercomputer.

The UK entered the supercomputer scene at around the same time (after many years of research) with the Atlas in 1962, a joint project between the University of Manchester, Ferranti International plc and the Plessey Co plc. The Manchester team was led by Tom Kilburn, who was also instrumental in the launch of the Manchester Baby in 1948, the world’s first stored-program computer.

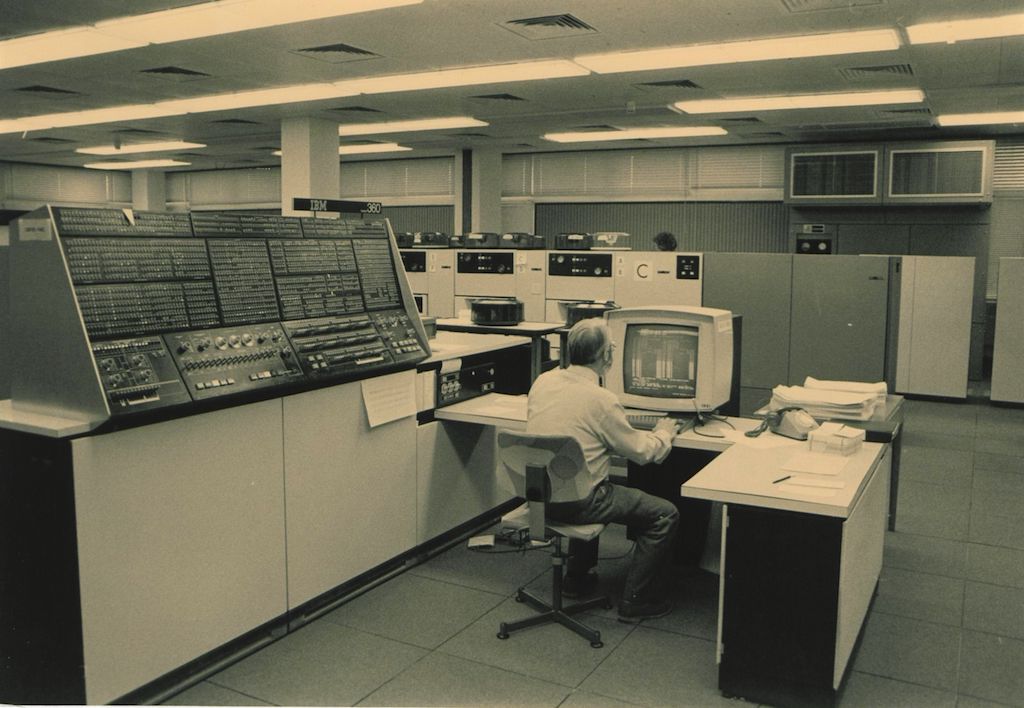

IBM 360/195 or Cosmos

In 1971 the Met Office purchased an IBM 360/195 or Cosmos, which allowed the 3-level model to be replaced by the 10-level model in 1972.

Met Office IT Fellow Chris Little joined the national weather service in 1974. He has programmed some of the most powerful supercomputers and instigated the use of a wide range of graphic devices for forecasters and researchers.

“When I arrived in the Met Office the forecast program, the model of the atmosphere, was written in Assembler,” he says.

“The Assembler had been modified by the Met Office to get performance. They thought that the subroutine handling, where you’re saving the registers and then moving into an Assembler subroutine and then restoring the registers was much too slow, so we tweaked it so that it ran much, much faster.

“The other thing we discovered on the IBM 360/195 was that if you got your loops down to less than 32 bytes, it ran like a bat out of hell, because it stopped doing instruction ‘fetch’es and there was a very small buffer and it did everything from there and it was extremely fast. The engineers couldn’t believe that we were getting 99.9% CPU utilisation.”

Chris also took a three-year secondment at the European Centre for Medium-Range Weather Forecasts, which had just been established in 1975, where he used a CDC 6600.

“It was a pan-European organisation set up by all the national met services in Europe, not just the European Union and included Norway, Iceland, Turkey, and Cyprus, for example. ECMWF was tasked with doing one-week weather forecasts, nobody had ever done that before.

“[For a one week forecast] you’re going across the life cycle of a depression which only lasts three or four days and then it dies and another depression appears somewhere. So, you’re going across the generations of the meteorological systems. It was not at all clear that the science would enable that.”

Chris was involved in the data assimilation program together with Andrew Lorenc and Chris Clarke from the Met Office, Gorm Larsen from Denmark, Stefano Tibaldi from Italy and the head of the assimilation group, Ian Rutherford from Canada.

Cray-1 and Control Data’s Cyber 205

A year into his secondment, the CDC 6600 was replaced with a Cray-1, which entailed several trips to Minneapolis-St Paul and Chippewa Falls.

“What was different about the Cray-1, serial number 1, was, as it was the first one, a first working prototype, it didn’t have parity checking on the memory. There was no single error correction, double error parity checking on the memory, so if a cosmic ray went through you may get a wrong result.”
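To illustrate what the missing protection does, here is a minimal sketch of a single even-parity bit guarding a 64-bit word. It is not a model of the Cray's actual circuitry, and the function names are hypothetical. It only shows how one flipped bit is noticed, and why two flipped bits slip past simple parity.

```python
def parity_bit(word: int) -> int:
    """Return 1 if the word has an odd number of 1 bits, else 0."""
    return bin(word).count("1") % 2


def store(word: int) -> tuple[int, int]:
    """Keep the word together with its parity bit, as checked memory would."""
    return word, parity_bit(word)


def is_corrupted(word: int, stored_parity: int) -> bool:
    """True when the word no longer matches the parity recorded at write time."""
    return parity_bit(word) != stored_parity


value, check = store(0x3FF0_0000_0000_0000)  # arbitrary 64-bit pattern

one_flip = value ^ (1 << 17)                # a single bit flipped
two_flips = value ^ (1 << 17) ^ (1 << 42)   # two bits flipped

print("untouched word flagged:", is_corrupted(value, check))
print("single flip flagged:   ", is_corrupted(one_flip, check))
print("double flip flagged:   ", is_corrupted(two_flips, check))
```

Memory with single-error correction and double-error detection stores several check bits per word rather than one, which is what lets it repair a single flipped bit and flag a double flip.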

In 1982 the Met Office obtained what it calls its first supercomputer, Control Data’s Cyber 205. It was 100 times faster than the IBM and allowed the Met Office to begin climate modelling to support the World Climate Programme.

This tied in with Chris’s return from his secondment and his promotion to one of the Met Office’s senior programmers, which saw him involved in the replacement of the IBM mainframe with the Cyber 205.

“It had a speed advantage over the Cray because it could do floating point calculations in 32-bit precision, rather than the Cray’s 64 bit. The 32-bit precision is adequate for most well-conditioned meteorological calculations,” he says.
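The trade-off Chris describes can be illustrated with a small Python sketch. It uses the standard library's `struct` module to round values to 32-bit precision and compares the results against Python's native 64-bit floats. The sample numbers and helper names are invented for illustration and are not taken from any Met Office code.

```python
import struct


def to_f32(x: float) -> float:
    """Round a 64-bit Python float to the nearest 32-bit float value."""
    return struct.unpack("f", struct.pack("f", x))[0]


def rel_error(approx: float, exact: float) -> float:
    """Relative difference between an approximation and a reference value."""
    return abs(approx - exact) / abs(exact)


# Well-conditioned: scaling a temperature in kelvin.
t = 287.15
well_64 = t * 1.5
well_32 = to_f32(to_f32(t) * to_f32(1.5))

# Ill-conditioned: subtracting two nearly equal numbers.
a, b = 1.0000001, 1.0
ill_64 = a - b
ill_32 = to_f32(to_f32(a) - to_f32(b))

print(f"well-conditioned relative error: {rel_error(well_32, well_64):.2e}")
print(f"ill-conditioned relative error:  {rel_error(ill_32, ill_64):.2e}")
```

The scaling loses almost nothing in 32-bit, while the subtraction of nearly equal values loses most of its accuracy, which is why the qualifier "well-conditioned" matters.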

Catherine says that although the Met Office had added a second IBM alongside Cosmos to allow some research in addition to the ten-level modelling, the system started to run out of computational power.

World Climate Programme

“The reason for it came out of growing international interest in climatology; the start of the concept of climate change had arrived by now,” says Catherine. “It was agreed that the UK would play a significant role in the World Climate Programme, which was established in 1979.

“Obviously, that required going back to our database, known as Midas, and being able to process all of that data, for which they needed a machine that was an order of magnitude more powerful than the IBM.

“So that’s when [the Met Office] moved to supercomputers. The intention was it would be attached to the IBM mainframe, but the mainframe started to creak because it couldn’t quite keep up with what was required. From then on, there was continuous progress.”

Meteorological data processing

The Met Office’s Hybrid Cloud Solutions Architect, Martyn Catlow, explains more about meteorological data processing, how calculations from Richardson’s time are still valid and how there were two ways of tackling data.

“One went back to the 1920s where marine data was punched onto Hollerith cards – that continued right up to the 1960s,” he says. “However, once technology became available in the form of electronic digital computers in the 1960s and ‘70s, the approach to data processing completely changed and it was done at scale.

“They started to not only punch data from records directly on specialised computer systems that allowed teams of people to key in information from forms by visually translating it and using a keyboard, into digitised form, but they’d also got this archive back to the 1920s of punched cards which they also digitised into electronic form by using card readers and so on. So there were two channels of data flow coming into the archives at that point.”

The data was used to look for diurnal and climatic patterns, a kind of analysis that had been carried out since the 1850s.

“At that time, the Met Office, Professor Galton, Lord Kelvin (of temperature scale fame) and colleagues developed mechanical machines to process data, these were analogue computers and used to do analysis of daily patterns and behaviour of climate over longer periods of time. That early work has fed into the modelling that we do today, in a very loose way.”

Global modelling

Today, the Met Office’s modelling is on a global basis. Martyn says: “If you imagine a grid that covers the entire surface of the earth, at each intersection of the verticals and horizontal lines of that grid you can forecast such parameters as wind speed, temperature, humidity and the whole range of other parameters in the very sophisticated models that we run.

“That’s done on a global scale, and in more detail in more condensed form and much higher resolution over certain areas of the world, like the UK and Europe, for example. That is presented in digitised form through those channels to the media and for specialist consumers like aviation and so on, and they all have different requirements.

“So, if you imagine different layers in the atmosphere, different grids on top of the surface grid going up for each point there, you’ve got a vertical representation of that data as well. There’s a whole load of data and the more you increase the resolution of those models, the more data you’ve got to process, and the aim is to process that in a minimal time so that people get a good and timely forecast out at the end.”
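To give a feel for how quickly the data grows, the sketch below counts the points on a regular latitude-longitude grid stacked over a number of vertical levels. The grid spacings, the level count and the function name are illustrative assumptions, not the Met Office's actual model configuration.

```python
def grid_points(spacing_deg: float, levels: int) -> int:
    """Count points on a regular lat-lon grid stacked over `levels` layers."""
    n_lon = round(360 / spacing_deg)      # columns around the globe
    n_lat = round(180 / spacing_deg) + 1  # rows pole to pole, both poles included
    return n_lon * n_lat * levels


LEVELS = 70  # hypothetical number of vertical levels

for spacing in (1.0, 0.5, 0.25):
    points = grid_points(spacing, LEVELS)
    print(f"{spacing:>5}° spacing: {points:,} points per parameter")
```

Halving the horizontal spacing roughly quadruples the number of points at every level, and that is before counting each extra parameter, such as wind speed, temperature and humidity, stored at each point.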

Unified Model

By 1991, with the need for yet more power, the Met Office introduced a Cray C-90, which was capable of implementing the Unified Model operationally.

Catherine says: “The Unified Model [means] that rather than having different models running so that one is giving you today’s forecast, one is looking a week out, and another one is looking at your climate model, you build them all into one so that your Unified Model is running everything from what’s going to happen in 24 hours to what might happen in 50 years, all at the same time. That mesh is not only getting finer, it’s also stretching out, so all of your models run together, which requires an awful lot of computing power.”

Two years later, the Met Office introduced its second Cray to provide yet more power. This was followed in 2016 by the Cray XC40 and the construction of a dedicated ‘supercomputer’ building.

“When we brought in the new Cray, part of the concept was around having capacity not only to do our own forecasting and research, but to be able to make supercomputing facilities available for other organisations involved in climate research,” says Catherine.

“The Met Office maintained its two computer halls within its HQ, but built a second building where a third supercomputer was located. They’re all connected up so the data can flow wherever it needs to flow, but it was focussing on enabling research, not just for the Met Office, but for other organisations involved in climate research.”

Supercomputing 2020+

Since the mid-1950s, when the Met Office purchased its own computer (a Ferranti Mercury Mk 1 known as Meteor), it has owned and run its own computers. However, the future technology model for the Met Office, announced in 2020 and currently being developed in partnership with Microsoft, AWS and others, will see a shift towards the cloud.

The project is called Supercomputing 2020+ and is currently delivering generation one, with generation two to follow. The shift is the result of a great deal of consideration of some complex trends in technology and science.

“Our contract with Microsoft, certainly in generation one, is that inside and under the covers there will still be a very recognisable supercomputer, the only real difference being that we will no longer host and operate it and that it will be tightly integrated with the wider Azure fabric and services,” says the Met Office’s Technology Director and Chief Information Officer, Charles Ewen.

“Our partner innovation and learning from generation 1 will be a springboard for generation 2, broadly timed for the late 2020s.”

“We didn’t have that fairly big power station at hand in ways that we could get requisite power from the grid in a resilient fashion; we need it to be resilient, because of its growing role as a factory. That just wasn’t available to us in the timescales and costs that we had available to us, so, there was no other choice but to put this out to market. This is one of many examples of the implication of exponentially growing scale.

“Generation one will be an HPE Cray EX generation supercomputer, fundamentally the same supercomputer that’s been implemented at Oak Ridge National Labs in the US for example, and at similar scales.

“That affords a new opportunity to further this general trend in IT in a way not seen before.

“It’s part of that sum that says it’s becoming too much of a thing when you start to think about the power station that drives it, about the physical space it would occupy, and so on and so on, it all gets exponentially bigger and more complex.”

Charles says that it is the Met Office’s job to do the fundamental and pioneering research and to convert that into models that represent the future state of the atmosphere, then to turn those models into useful data that operational meteorologists can use to advise and guide its users, and into datasets that help people make better decisions.