The notions of data fairness and data quality are pivotal throughout the development of machine learning (ML) systems in various contexts. These contexts typically involve the interplay among different actors, including market actors, industry practitioners, regulators, policymakers, and the designers and developers of the ML systems themselves.

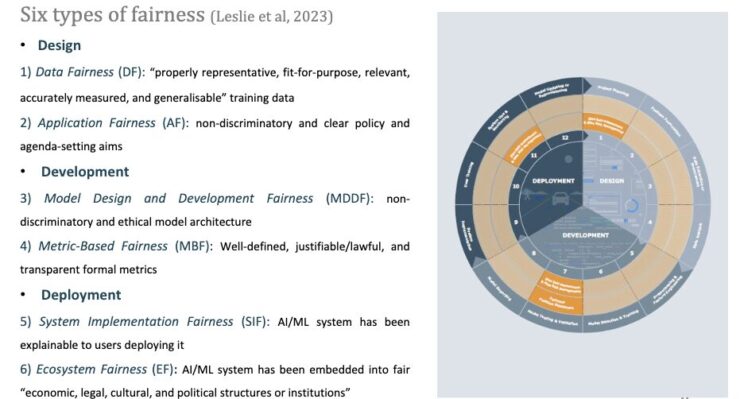

Data fairness generally refers to the representativeness, suitability, relevance, accuracy, and generalisability of the dataset on which an ML system, or any other artificial intelligence (AI) system, has been trained and tested (Leslie et al., 2023).

As a concept, it is therefore intrinsically associated with data quality, that is, the dataset’s “fitness for purpose” (The Government Data Quality Framework, 2020). For this presentation, we draw on findings from our ongoing UKRI-funded project on trustworthy ML approaches to energy consumption data, with a view to discussing the notions of data fairness and data quality as they relate to the use of ML models within the energy sector.

The definition of trustworthiness we follow proposes that an AI/ML system, or model, can be trusted to the extent that it is lawful, robust, accountable, and ethical for both end-users and any other affected party (UKRI/Innovate UK, 2023). On this basis, our main goal has been to build a trustworthy assurance case (see Burr and Leslie, 2023), i.e. a case of trust establishment among those engaged in the development and deployment of ML models.

Accordingly, we focus on the actions (e.g. data cleaning, model training/re-training, “trial and error” methods) undertaken by a technical team based at the University of Sheffield, which is developing the ML model to be used. We consider these actions within the broader framework (sociotechnical, economic, policy, ethical, etc.) in which the project consortium is set.

Based on semi-structured in-depth interviews with members of the technical team at different stages of the ML lifecycle, we suggest that trustworthiness emerges over time via a sequence of actions and continuous improvement, constituting a rather bottom-up and model-driven practice towards the goal of establishing trust.

In this presentation, our purpose is to elaborate on the different norms associated with the technical team’s actions at the ML lifecycle stages; how such norms could be juxtaposed with other normative criteria, including legal norms as well as ethical ones; and how the same norms might be influenced by the expectations, standards, and thresholds of other stakeholders, such as market actors.

Authors: Dr Jonathan Foster (Senior Lecturer, Information School, University of Sheffield, j.j.foster@sheffield.ac.uk); Dr George Zoukas (Postdoctoral Research Associate, Information School, University of Sheffield, g.zoukas@sheffield.ac.uk)

References

Burr, C. and Leslie, D. (2023). Ethical assurance: a practical approach to the responsible design, development, and deployment of data-driven technologies. AI and Ethics, 3(1), 73-98.

Leslie, D., Rincón, C., Briggs, M., Perini, A., Jayadeva, S., Borda, A., Bennett, SJ., Burr, C., Aitken, M., Katell, M., Fischer, C., Wong, J., and Kherroubi Garcia, I. (2023). AI Fairness in Practice. The Alan Turing Institute.

The Government Data Quality Framework (2020). Available at: https://www.gov.uk/government/publications/the-government-data-quality-framework/the-government-data-quality-framework

UKRI/Innovate UK (2023). Report on the Core Principles and Opportunities for Responsible and Trustworthy AI. Available at: https://iuk.ktn-uk.org/wp-content/uploads/2023/10/responsible-trustworthy-ai-report.pdf